We need to talk about our AI fetish

To allow those developing AI to lead the debate about its future is an error we may not get a chance to fix

Artificial intelligence puts us in a bind that in some ways is quite new. It’s the first serious challenge to the ideas underpinning the modern state: governance, social and mental health, a balance between capitalism and protecting the individual, the extent of cooperation, collaboration and commerce with other states.

How can we address and wrestle with an amorphous technology that has not defined itself, even as it runs rampant through increasing facets of our lives? I don’t have the answer to that, but I do know what we shouldn’t do.

The streets

But in other ways we have been here before, making the same mistakes. Only this time it might not be reversible.

Back in the 1920s, the idea of a street was not fixed. People “regarded the city street as a public space, open to anyone who did not endanger or obstruct other users”, in the words of Peter Norton, author of a paper called ‘Street Rivals’ that later became a book, ‘Fighting Traffic.’ Already, however, the question of who took precedence was becoming a loaded, and increasingly bloody, issue. ‘Joy riders’ took on ‘jay walkers’, and judges would usually side with pedestrians in lawsuits. Motorist associations and the car industry lobbied hard to remove pedestrians from streets and for the construction of more vehicle-only thoroughfares. The biggest and fastest technology won. For a century.

Only in recent years has there been any concentrated effort to reverse this, with the rise of ‘complete streets’, bicycle and pedestrian infrastructure, woonerfs and traffic calming. Technology is involved: electric micro-mobility gives people more ways to get about without a car; improved VR and AR help designers visualise what these spaces would look like to the user; and modular, prefabricated street design elements, together with approaches such as ‘tactical urbanism’, allow local communities to modify and adapt their landscape in short-term increments.

We are getting there slowly. We are reversing a fetish for the car, with its fast, independent mobility, ending the dominance of a technology over the needs and desires of those who inhabit the landscape. This is not easy to do, and it’s taken changes to our planet’s climate, a pandemic, and the deaths of tens of millions of people in traffic accidents (3.6 million in the U.S. since 1899). If we had better understood the implications of the first automobile technology, perhaps we could have made better decisions.

Let’s not make the same mistake with AI.

Not easy with AI

We have failed to make the right choices because we let the market decide. And by market here, we mean those standing to make money from it as much as consumers. We’re driven along, like armies on train schedules.

Admittedly, it’s not easy to assess the implications of a complex technology like AI if you’re not an expert in it, so we tend to listen to the experts. But listening to the experts should tell you all you need to know about the enormity of the commitment we’re making, and how they see the future of AI. And how they’re most definitely not the people we should be listening to.

First off, the size and impact of AI has already created huge distortions in the world, redirecting massive resources in a twin battle of commercial and nationalist competition.

- Nvidia is now the third-largest company in the world by market value, largely because its specialised chips account for more than 70 percent of AI chip sales.

- The U.S. has just announced it will provide rival chip maker Intel with $20 billion in grants and loans to boost the country’s position in AI.

- Memory-maker Micron has mostly run out of high-bandwidth memory (HBM) stocks because of the chips’ use in AI; one customer paid $600 million up-front to lock in supply, according to a story by Stack.

- Data centres are rapidly converting themselves into ‘AI data centres’, according to a ‘State of the Data Center’ report by the industry’s professional association AFCOM.

- Back in January the International Energy Agency forecast that data centres may more than double their electrical consumption by 2026. (Source: Sandra MacGregor, Data Center Knowledge)

- AI is sucking up all the payroll: tech workers who don’t have AI skills are finding fewer roles and lower salaries, or seeing their jobs disappear entirely to automation and AI. (Source: Belle Lin at WSJ)

- China may be behind in physical assets but it is moving fast on expertise, generating almost half the world’s top AI researchers (Source: New York Times).

This is not just a blip. Listen to Sam Altman, OpenAI’s CEO, who sees a future where demand for AI-driven apps is limited only by the amount of computing available at a price the consumer is willing to pay. “Compute is going to be the currency of the future. I think it will be maybe the most precious commodity in the world, and I think we should be investing heavily to make a lot more compute.”

In other words, the scarcest resource will be the computing power to run AI, which means the rise in demand for energy, chips, memory and talent is just the beginning. “There’s a lot of parts of that that are hard. Energy is the hardest part, building data centers is also hard, the supply chain is hard, and then of course, fabricating enough chips is hard. But this seems to be where things are going. We’re going to want an amount of compute that’s just hard to reason about right now.” (Source: Sam Altman on Lex Fridman’s podcast)

Altman is probably the most influential thinker on AI right now, and he has huge skin in the game. His company, OpenAI, is currently duking it out with rivals like Anthropic, maker of Claude. So while it’s great that he does interviews, and that he is thinking about all this, listening only to him and his ilk is like consulting motor car manufacturers a century ago. Of course they talked about the need for more factories, more components and more roads. For them, the future was their technology. They framed problems with the technology in their terms, and painted themselves as both creator and saviour. AI is no different.

So what are the dangers?

Well, I’ve gone into this in past posts, so I won’t rehash them here. The main point is that we simply don’t know enough to make sensible decisions on how to approach AI.

Consider the following:

- We still don’t really know how and why AI models work, and we’re increasingly outsourcing the process of improving AI to the AI itself. Take, for example, Evolutionary Model Merge, an increasingly popular technique that combines multiple existing models to create a new one, adding further layers of complexity and opacity to an already opaque and complex system. “The central insight here,” writes Harry Law in his Learning from Examples newsletter, “is that AI could do a better job of determining which models to merge than humans, especially when it comes to merging across multiple generations of models.”

- We haven’t even agreed on what AI is. There is as yet no science of AI. We don’t agree on definitions. Because of the interdisciplinary nature of AI it can be approached from different angles, with different frameworks, assumptions and objectives. This would be exciting and refreshing were it not simultaneously impacting us all in fast-moving ways.

- We don’t really know who owns what. We can only be sure of one thing: the big fellas dominate. As Martin Peers wrote in The Information’s Briefing (sub required): “It is an uncomfortable truth of the technology industry currently that we really have little clue what lies behind some of the world’s most important partnerships.” Wary of regulators, Big Tech is avoiding acquisitions in favour of partnerships that “involve access to a lot of the things one gets in acquisitions.” Look at Microsoft’s multi-billion-dollar alliance with OpenAI and, more recently, with Inflection AI.

Not only does this concentrate resources in the hands of the few, it also feeds the ‘inevitability of the technology’, pushed by the dominant companies that have sunk the most into it in the hope of the profits that may come out of it. In short, we’re in danger of imposing a technological lock-in, or path dependence, which not only limits the range of technologies on offer but, more seriously, means the most influential players in AI are those who need serious returns on their investment, which colours their advice and thought leadership.

We’re throwing everything we’ve got at AI. It’s ultimately a bet: If we throw enough at AI now, it will solve the problems that arise — social, environmental, political, economic — from throwing everything we’ve got at AI.

We’ve been here before

The silly thing about all this is that we’ve been here before. Worrying about where AI may take us is nothing new. AI’s ‘founders’, folk like John von Neumann and I.J. Good, knew where things were going. Science fiction writer and professor Vernor Vinge, who died this month, popularised the term ‘singularity’, but he was not the first to understand that there will come a point where the intelligence that humans build into machines will outstrip human intelligence and, suddenly and rapidly, leave us in the dust.

Why, then, have we not better prepared ourselves for this moment? Vinge first wrote of this more than 40 years ago. In 1993 he even gave an idea of when it might happen: between 2005 and 2030. Von Neumann, considered one of the fathers of AI, saw the possibility in the 1950s, according to Polish-born mathematician and nuclear physicist Stanisław Ulam, who quoted him as saying:

The ever-accelerating progress of technology and changes in the mode of human life give the appearance of approaching some essential singularity in the history of the race beyond which human affairs, as we know them, could not continue.

The problem can be explained quite simply: we fetishise technological progress, as if it were itself synonymous with human progress. And so we choose the rosiest part of a technology and ask users: would you not want this? What’s not to like?

The trouble is that this is only the starting point, the entry drug, the bait and switch, for something far less benevolent.

Economist David McWilliams calls it the dehumanisation of curiosity: as each new social technology wave washes over us, it demands less and less of our cognitive resources until eventually all agency is lost. At first Google search required us to define what it was that we wanted; Facebook et al required us to define who and what we wanted to share our day with; and Twitter required us to be pithy, thoughtful, incisive, to debate. TikTok just required us to scroll. In the end it turned out the whole social media thing was not about us creating and sharing wisdom and intelligent content, but about the platforms outsourcing the expensive bit, creating entertainment, to those willing to sell themselves and their lives, hawking crap or doing pratfalls.

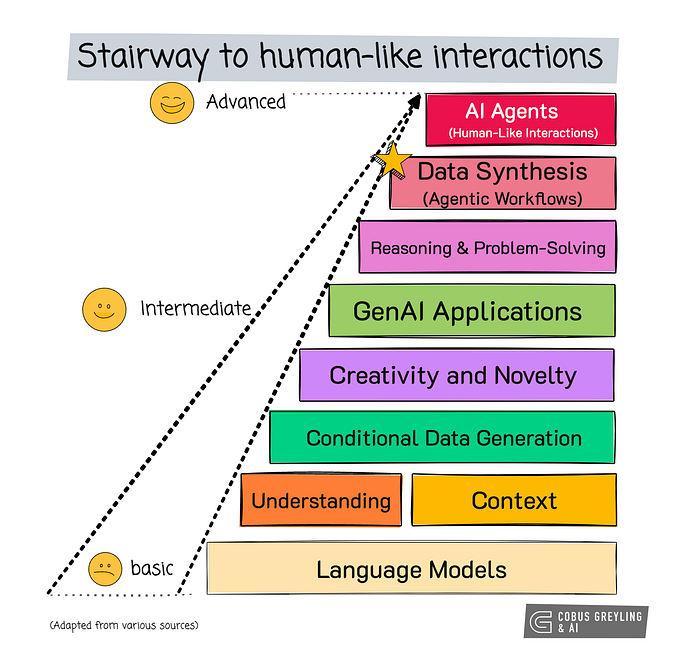

AI has not reached that point. Yet. We’re in this early-Google summer where we have to think about what we want our technology to do for us. The search prompt would sit there awaiting us, cursor blinking, as it does for us in ChatGPT or Claude. But this is just a phase. Generative AI will soon anticipate what we want, or at least a bastardised version of what we want. It will deliver a lowest-common-denominator version which, because it doesn’t require us to spell out what we want (and so see, in text, how much of our time we are wasting on it), will strip away our ability to compute (to think), along with our ability, and desire, to do the complex things for which we might be paid a salary or stock options.

AI is, ultimately, just another algorithm.

We need to talk

We need to think hard about not just AI, but what kind of world we want to live in. This needn’t be an airy-fairy discussion, but it has to be one based on human principles, and on the willingness of those we have elected to office to make unpopular decisions. When, back in 1945, UK Prime Minister Clement Attlee and his health minister Aneurin Bevan sought to build a national health service free to all, they faced significant entrenched opposition, much of it from sources that would later change their minds. The Conservative Party under Winston Churchill voted against it 21 times, with Churchill calling it “a first step to turn Britain into a National Socialist (Nazi) economy.” (A former chairman of the British Medical Association used similar language.) Doctors voted against it 10:1. Charities, churches and local authorities fought it.

But Bevan won out, because as a young miner in the slums of southern Wales he had helped develop the NHS in microcosm: a ‘mutual aid society’ that by 1933 was supplying the medical needs of 95% of the local population in return for a subscription of pennies per week. Bevan had seen the future, understood its power, and haggled, cajoled and mobilised until his vision was reality. The result: one of the most popular British institutions, and a clear advantage over countries without a similar system. In 1948 infant mortality in the UK and US was more or less the same, around 33 deaths per 1,000 live births. By 2018 the number had fallen to 3.8 in the UK, but only to 5.7 in the United States. That’s 1.3 million Brits who survived being born, according to my (probably incorrect) maths. (Sources: West End at War; How Labour built the NHS, LSE)

AI is not so simple. Those debating it tend to be those who don’t understand it at all, and those who understand it too well. The former fish for sound-bites while the latter — those who build it — often claim that it is only they who can map our future. What’s missing is a discussion about what we want our technology to do for us. This is not a discussion about AI; it’s a discussion about where we want our world to go. This seems obvious, but nearly always the discussion doesn’t happen — partly because of our technology fetish, but also because entrenched interests will not be honest about what might happen. We’ve never had a proper debate about the pernicious effects of Western-built social media, but our politicians are happy to wave angry fingers at China over TikTok.

Magic minerals and miracle cures

We have a terrible track record of failing to learn enough about a new technology to make an informed decision about what is best for us, instead allowing our system to be bent by powerful lobbies and self-interest. Cars are not the only example. The building industry had known about the health risks associated with asbestos since the 1920s, but it kept them secret and fought regulation. Production of asbestos, once called the ‘magic mineral’, kept rising, only peaking in the late 1970s. The industry employed now-familiar tactics to muzzle dissent: “organizing front and public relations companies, influencing the regulatory process, discrediting critics, and manufacturing an alternative science (or history).” (Source: Defending the Indefensible, 2008) As many as 250,000 people still die globally of asbestos-related diseases each year, 25 years after it was banned in the UK.

Another mass killer, thalidomide, was promoted and protected in a similar manner. Its manufacturer, Chemie Grünenthal, “definitely knew about the association of the drug with polyneuritis (damage to the peripheral nervous system)” even before it brought it to market. The company ignored or downplayed reports of problems, hired a private detective to discredit critical doctors, and only in 2012 apologised for producing the drug and for remaining silent about its defects. (Source: The Thalidomide Catastrophe, 2018)

I could go on: leaded gasoline, CFCs, plastics, Agent Orange. There are nearly always powerful incentives for companies, governments or armies to keep using a technology even after they become aware of its side-effects. In the case of tetraethyl lead in cars, the U.S. Public Health Service warned of its menace to public health in 1923, but that didn’t stop Big Oil from setting up a corporation the following year to produce and market leaded gasoline, launching a PR campaign to promote its supposed safety and discredit its critics, funding research that downplayed health risks, and lobbying against attempts to regulate. It was only banned from U.S. road fuel in 1996.

An AI Buildup

We are now at a key inflection point. We should be closely scrutinising the industry to promote competition, but we should also be looking at harms, and potential harms: to mental health, to employment, to the environment, to privacy, and whether AI represents an existential threat. But instead Big Tech and Big Government are focusing on hoarding resources and funding an ‘AI buildup.’ In other words, just at a time when we should be discussing how to direct and where to circumscribe AI, we are locked in an arms race where the goal is to achieve some kind of AI advantage, or supremacy.

AI is not a distant concept. It is fundamentally changing our lives at a clip we’ve never experienced. To allow those developing AI to lead the debate about its future is an error we may not get a chance to correct.